Korean company's new smartphone, positioned as rival to Apple's iPhone, will also be able to translate nine languages

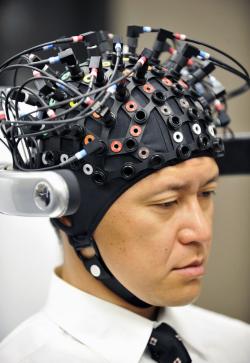

The Galaxy S4 launched by Samsung in New York has a 'smart scroll and smart pause' feature that monitors users' eye movements. Photograph: Adrees Latif/Reuters

Korea's Samsung turned to song and dance on Thursday as it took a shot at ousting Apple as king of the smartphone.

In a packed Radio City Music Hall in Manhattan, Samsung unveiled the Galaxy S4, the latest iteration of its best-selling smartphone, and set out its challenge to Apple on the US giant's own turf.

In a widely anticipated move, the company unveiled the most eye-catching feature of the new phone. It has pioneered the "smart scroll and smart pause" feature. The front-facing camera on the handset monitors users' eye movements and responds accordingly. Tilting the phone while looking at it will scroll web pages, and it can even pause a video if a user looks away.

After weeks of teasing, Samsung unveiled a phone it is promoting as "moving beyond touch". With a simple wave of the hand, Minority Report-style, the phone will move a web page or a photograph.

The handset can also translate nine languages, from text to speech and vice versa or just translate text. JK Shin, Samsung's head of mobile, said: "Think about being able to communicate with your friends around the world without language barriers." He called the device "a life companion for a richer, simpler life".

Samsung said that from the end of next month the Galaxy S4 would be offered by 327 mobile operators in 125 countries.

Samsung's Galaxy S4. Photograph: Andrew Gombert/EPA

The show-like unveiling featured tap-dancing children to illustrate the Galaxy's 13 megapixel camera, which can record the user as well as his or her subject. Other mobiles offer similar functions.

Michael Gartenberg, analyst at Gartner, gave the handset a cautious welcome: "Features are impressive but a lot of them feel gratuitous. Also a lot of features available for other Android devices through 3rd party apps," he wrote on Twitter.

While detailed reviews are yet to come, analysts said it was clear from the scale of Samsung's launch that the Korean firm has Apple firmly in its sights.

Forrester analyst Charles Golvin said Samsung has no intention of playing Pepsi to Apple's Coca-Cola. "Companies like Pepsi and Avis to some extent played up being number two, it was a point of difference. Samsung wants to be number one. In fact, it's saying if you think Apple is number one, you are wrong."

Golvin said Samsung still trailed Apple in the US smartphone market. "Apple has about 35% and Samsung around 16-17%," he said. But Samsung's overall share was far higher and the market is changing.

Six years after Steve Jobs unveiled the first iPhone, smartphone makers are chasing two very different markets. "There's the upgrade market for those who already have one; those tend to be more well-heeled buyers. And there's new buyers – that tend to be more financially constrained," said Golvin.

Samsung's new Galaxy is firmly aimed at the former and the company has a tight grip on the new, less moneyed buyers too. That strategy has presented Apple with its biggest challenge in smartphones yet.

Carolina Milanesi, consumer devices analyst at Gartner, said: "We are at the point where the majority of sales in this segment come from replacement, not new users. In other words, the addressable market is starting to be saturated and now it is about keeping their refresh cycle short. The problem is, though, that technology innovation is slowing down and as we move to more innovation being delivered via software, the cycles are getting longer rather than shorter." She pointed to Nintendo's Wii U games console, launched last November. In January, Nintendo cut its sales forecast by 27%.

Colin Gillis, technology analyst at BGC Partners, said the smartphone war was turning into a two-horse race. "These guys make 120% of the profits because everyone else is losing money," he said.

But Gillis said that if Samsung was really going to beat Apple at its own game, it would need to keep innovating. "Generating buzz is a great thing to do provided your products are worthy of it. This market is exploding. They will sell 10m of these things out of the gate. But it's not a one player market, they will have to really deliver."

Harry Wang, analyst at Parks Associates, said: "Samsung is trying to do one thing: knock Apple's status in the US. They want to show that they can excite the high-end smartphone adopters just as well as Apple."

Wang said Samsung already had an advantage in the Far East, where its brand is better known than Apple's, but it is now clearly after US consumers. "They are catching up. Samsung is now a formidable brand."

He said the company had done a good job of distancing itself both from Apple and its Android competitors. Samsung spent $410m promoting Galaxy in the US last year, according to a report from Kantar Media, more than Apple, which spent $333m on iPhone ads in 2012.

Samsung used a series of ads to mock iPhone buyers for waiting in long lines and to position Galaxy as a smarter, hipper alternative. But Apple edged Samsung out in sales at the end of 2012. The iPhone 5 was launched last September and was initially marred by backlash over the decision to drop Google Maps for Apple's own, flawed, maps programme. It went on to be the most popular phone in the US during the fourth quarter of 2012. Apple sold 17.7m smartphones during the quarter while Samsung sold 16.8m mobile phones, according to Strategy Analytics' Wireless Device Strategy report. The boost made Apple the US's number one mobile phone vendor for the first time ever, with a record 34% market share.
